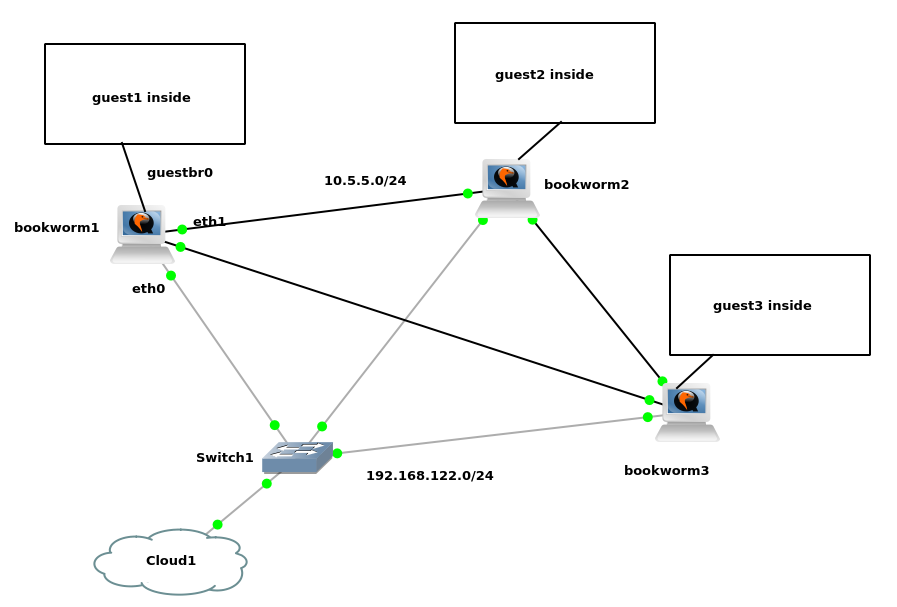

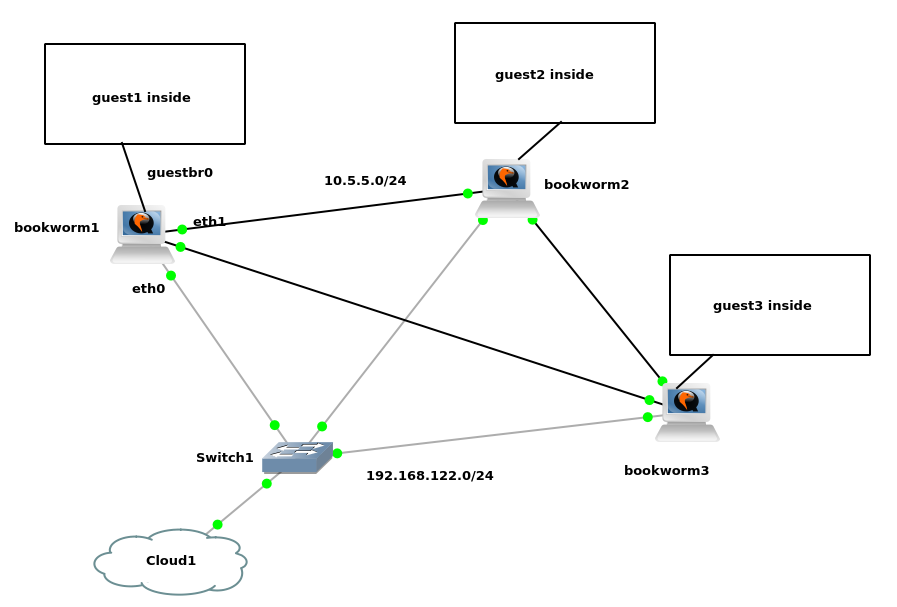

now that the basic poc works fine, we are gonna proceed with its virtualization-flavored version (be it KVM or Xen, it doesn’t matter). the difference is that the user nodes are no longer standalone VPCS devices but virtualized guests, which live on their respective type I hypervisor while being connected to a linux bridge on the host system.

in fact, not much changes here. the layer 2 architecture basically remains the same. the only differences are:

- the eth2 link gets released, as the guest now lives inside the node instead of being a standalone VPCS
- the guestbr0 bridge now sits on a VLAN (eth1.100 + eth2.100) instead of a bare cross-over on eth1. since the three nodes are cabled in a ring, bridging both links creates a layer 2 loop, which means we absolutely need to enable STP on that very bridge

we have a three-node cluster, and the nodes are connected to each other with direct eth1<-->eth2 links

(STP is enabled on the internal bridges using those links, namely br0 and guestbr0).

the xenbr0 bridge is the perimeter one and runs without STP.

yeah sorry, we call it xenbr0 no matter what kind of hypervisor we have there.

```
bridge name     bridge id               STP enabled     interfaces
guestbr0        8000.9e08bc8d4fcd       yes             eth1.100
                                                        eth2.100
xenbr0          8000.020000000000       no              eth0
```
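to double-check the STP column of that listing without bridge-utils, the same flag can be read from sysfs. below is a minimal sketch, assuming the usual linux bridge attribute /sys/class/net/<bridge>/bridge/stp_state (0 = off, 1 = kernel STP, 2 = userspace STP). the helper name `stp_state` and the `SYSFS_ROOT` override (only there so it can be tried against a fake tree) are hypothetical.

```shell
#!/bin/sh
# stp_state BRIDGE: hypothetical helper that prints "off", "kernel" or "user"
# depending on the bridge's STP mode, as reported by sysfs.
# SYSFS_ROOT defaults to /sys and can be overridden to test against a fake tree.
stp_state() {
    local f="${SYSFS_ROOT:-/sys}/class/net/$1/bridge/stp_state"
    [ -r "$f" ] || { echo "no such bridge: $1" >&2; return 1; }
    case $(cat "$f") in
        0) echo off ;;
        1) echo kernel ;;
        2) echo user ;;
        *) echo unknown ;;
    esac
}

# on every node, guestbr0 should report kernel (or user, if a userspace STP
# daemon is running) and xenbr0 should report off:
#   for br in guestbr0 xenbr0; do printf '%s: %s\n' "$br" "$(stp_state "$br")"; done
```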

we are adding the GNS3 device bookworm3 (node3) with the private base MAC address 02:00:00:00:00:00

also increase the RAM on the nodes from 256 MB to 1024 MB, as they become virtualization hosts

we are setting up the user VLAN, so load the 8021q module at every boot and right now

```
echo 8021q >> /etc/modules
modprobe 8021q
```
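the append above is not idempotent: running it twice leaves two 8021q lines in /etc/modules. a minimal guarded variant, sketched as a shell function; the name `persist_module` is hypothetical, and the target file is a parameter so it can be tried on a scratch copy first.

```shell
#!/bin/sh
# persist_module MODULE [FILE]: hypothetical helper, not part of any package.
# Appends MODULE to FILE (default /etc/modules) only if no identical line exists yet.
persist_module() {
    local mod=$1 file=${2:-/etc/modules}
    # -q: quiet, -x: whole-line match, -F: fixed string (no regex)
    grep -qxF "$mod" "$file" 2>/dev/null || printf '%s\n' "$mod" >> "$file"
}

# usage on a real node (as root):
#   persist_module 8021q && modprobe 8021q
```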

define the VLANs and the entirely different layer 2 topology, e.g. on node1

```
vi /etc/network/interfaces
```

```
auto eth0
iface eth0 inet manual

auto eth1
iface eth1 inet manual

iface eth1.100 inet manual
    vlan-raw-device eth1

auto eth2
iface eth2 inet manual

iface eth2.100 inet manual
    vlan-raw-device eth2

auto xenbr0
iface xenbr0 inet static
    bridge_ports eth0
    bridge_hw eth0
    # node1
    address 192.168.122.11/24
    gateway 192.168.122.1

auto guestbr0
iface guestbr0 inet static
    bridge_ports eth1.100 eth2.100
    bridge_stp on
    bridge_hw eth1.100
    # duplicate on all three nodes
    address 10.5.5.254/24
```

and here’s what changes in the xenbr0 stanza on the other nodes

```
# node2
address 192.168.122.12/24

# node3
address 192.168.122.13/24
```
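since the three files only differ in the xenbr0 address, they could be rendered from the node number instead of being edited by hand. a sketch, assuming the 192.168.122.1N addressing and the exact stanzas shown above; `gen_interfaces` is a hypothetical helper that only prints to stdout, so the result can be diffed before it replaces anything.

```shell
#!/bin/sh
# gen_interfaces N: hypothetical helper printing /etc/network/interfaces for node N (1..3).
# Only the xenbr0 address differs per node; guestbr0 carries the same 10.5.5.254 everywhere.
gen_interfaces() {
    case $1 in
        1|2|3) ;;
        *) echo "usage: gen_interfaces 1|2|3" >&2; return 1 ;;
    esac
    cat <<EOF
auto eth0
iface eth0 inet manual

auto eth1
iface eth1 inet manual

iface eth1.100 inet manual
    vlan-raw-device eth1

auto eth2
iface eth2 inet manual

iface eth2.100 inet manual
    vlan-raw-device eth2

auto xenbr0
iface xenbr0 inet static
    bridge_ports eth0
    bridge_hw eth0
    # node$1
    address 192.168.122.1$1/24
    gateway 192.168.122.1

auto guestbr0
iface guestbr0 inet static
    bridge_ports eth1.100 eth2.100
    bridge_stp on
    bridge_hw eth1.100
    # duplicate on all three nodes
    address 10.5.5.254/24
EOF
}

# review, then install by hand, e.g. on node2:
#   gen_interfaces 2 > /tmp/interfaces && diff -u /etc/network/interfaces /tmp/interfaces
```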

static name resolution is always good

```
vi /etc/hosts
```

```
192.168.122.13 bookworm3
```
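the entry above only names bookworm3. to get all three nodes into every /etc/hosts without piling up duplicates, something like the following would do. it assumes the other two nodes are called bookworm1 and bookworm2 (bookworm1 shows up further down, bookworm2 is a guess), and `add_host` is a hypothetical helper.

```shell
#!/bin/sh
# add_host IP NAME [FILE]: hypothetical helper; appends "IP<TAB>NAME" to FILE
# (default /etc/hosts) unless NAME is already listed on a non-comment line.
add_host() {
    local ip=$1 name=$2 file=${3:-/etc/hosts}
    # skip comment lines, then look for NAME among the hostname fields ($2..$NF)
    if awk -v n="$name" '
        /^[ \t]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file" 2>/dev/null; then
        return 0
    fi
    printf '%s\t%s\n' "$ip" "$name" >> "$file"
}

# assumed naming bookworm1..3 for node1..3, run as root on each node:
#   for n in 1 2 3; do add_host "192.168.122.1$n" "bookworm$n"; done
```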

replicate the setup from poc1 incl. SNAT on node3

```
table ip nat {
    chain postrouting {
        ip saddr 10.5.5.0/24 oif xenbr0 snat 192.168.122.13;
        ...

table netdev filter {
    chain egress {
        arp saddr ether 02:00:00:00:00:01 drop
        ...
```
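the rule above hardcodes node3’s own xenbr0 address. if the same SNAT is replicated on node1 and node2, only that address changes, so the nat table can be printed per node. a sketch, assuming the 192.168.122.1N addressing: `nat_ruleset` is hypothetical, the base-chain declaration (hook, priority, policy) is spelled out, and the rule uses the `snat to` form documented on the nftables wiki. the netdev egress part is left out on purpose, since its device and the rest of its rules are elided above.

```shell
#!/bin/sh
# nat_ruleset N: hypothetical helper printing an nftables nat table for node N (1..3).
# Guest traffic from 10.5.5.0/24 leaving via xenbr0 is source-NATed to the node's own address.
nat_ruleset() {
    case $1 in
        1|2|3) ;;
        *) echo "usage: nat_ruleset 1|2|3" >&2; return 1 ;;
    esac
    cat <<EOF
table ip nat {
    chain postrouting {
        type nat hook postrouting priority srcnat; policy accept;
        ip saddr 10.5.5.0/24 oif "xenbr0" snat to 192.168.122.1$1
    }
}
EOF
}

# check the syntax without touching the live ruleset, then load (as root):
#   nat_ruleset 3 > /tmp/nat.nft && nft -c -f /tmp/nat.nft && nft -f /tmp/nat.nft
```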

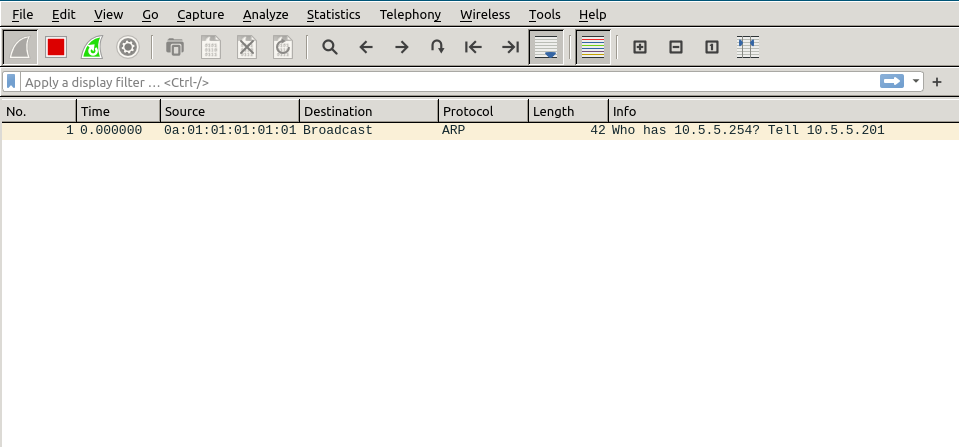

sniff all three links between the KVM hosts and ARP-resolve the gateway from a nested guest

from guest1 within bookworm1

```
ifconfig eth0 10.5.5.201/24 up
ping -c3 10.5.5.254
ping -c3 opendns.com
arp -a
```

we’re all good: it still works as with poc1, and there’s no echo reply from the foreign KVM host gateways
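to make that check scriptable, the `arp -a` output from the guest can be tested against the MAC the local node’s guestbr0 is expected to carry. a sketch, assuming net-tools style lines such as `? (10.5.5.254) at <mac> [ether] on eth0`; `gw_mac_is` is hypothetical, and the expected MAC has to be looked up on the host (e.g. with `ip link show guestbr0`), since only node3’s base MAC is given here.

```shell
#!/bin/sh
# gw_mac_is IP MAC: hypothetical filter; reads `arp -a` output on stdin and
# succeeds only if IP is listed with MAC (compared case-insensitively),
# i.e. the guest resolved the gateway to the expected host.
gw_mac_is() {
    awk -v ip="($1)" -v mac="$2" '
        $2 == ip { seen = 1; if (tolower($4) == tolower(mac)) ok = 1 }
        END {
            if (!seen) print "no ARP entry for " ip > "/dev/stderr"
            exit !ok
        }'
}

# usage inside the guest, after pinging the gateway:
#   arp -a | gw_mac_is 10.5.5.254 <MAC of guestbr0 on this node>
```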

also the users can still reach each other

from guest3 within bookworm3

```
ifconfig eth0 10.5.5.203/24 up
ping -c3 10.5.5.201
```

https://wiki.debian.org/BridgeNetworkConnections