tested with NetBSD 7 (2017/18)

this is the first flavor of this setup:

wd0e (local RAID component) + wd0f,g (exported over iSCSI) ==> each node provides a fault-tolerant folder

node1. TODO automate the installation process (rebuild netbsd-INSTALL)

disklabel wd0
disklabel wd0 > wd0.dist
cp -pi wd0.dist wd0.new
disklabel -l
vi wd0.new

on node1 adding partitions,

#        size    offset     fstype [fsize bsize cpg/sgs]
 a:   8389584      2048     4.2BSD   2048 16384     0  # (Cyl.      2*-   8325*)
 b:   2097648   8391632       swap                     # (Cyl.   8325*-  10406*)
 c: 500116144      2048     unused      0     0        # (Cyl.      2*- 496148)
 d: 500118192         0     unused      0     0        # (Cyl.      0 - 496148)
 e:   8388608  10489280       RAID
 f:   8388608  18877888  Version 7
 g:   8388608  27266496  Version 7

on nodes 2,3, adding partitions,

#        size    offset     fstype [fsize bsize cpg/sgs]
 a:  20972448      2048     4.2BSD   2048 16384     0  # (Cyl.      2*-  20808*)
 b:  16556400  20974496       swap                     # (Cyl.  20808*-  37233*)
 c: 500116144      2048     unused      0     0        # (Cyl.      2*- 496148)
 d: 500118192         0     unused      0     0        # (Cyl.      0 - 496148)
 e:   8388608  37530896       RAID
 f:   8388608  45919504  Version 7
 g:   8388608  54308112  Version 7

applying,

disklabel -R -r wd0 wd0.new
disklabel wd0

node1: offset 8391632 + swap size 2097648 = offset 10489280

nodes 2,3: offset 20974496 + swap size 16556400 = offset 37530896

4096 MiB * 1024 * 1024 / 512 bytes per sector = 8388608 blocks

the Version 7 fstype is just for fun, as this field is informative only, and so are the fsize/bsize values

source: https://www.netbsd.org/gallery/advocacy/jdf/flyer-netbsd-switchinglinux_en.pdf
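The offset arithmetic above can be scripted rather than done by hand. This is only a sketch: the helper names `mib_to_sectors` and `next_offset` are made up for the example, and 512-byte sectors are assumed, as in the notes.

```shell
#!/bin/sh
# Sector arithmetic for the disklabel entries (512-byte sectors assumed).
SECTOR_BYTES=512

# mib_to_sectors MIB: size in sectors of a partition of MIB mebibytes
mib_to_sectors() {
    echo $(( $1 * 1024 * 1024 / $SECTOR_BYTES ))
}

# next_offset OFFSET SIZE: first free sector after a partition
next_offset() {
    echo $(( $1 + $2 ))
}

size=$(mib_to_sectors 4096)
e=$(next_offset 8391632 2097648)   # node1: e starts right after swap
f=$(next_offset "$e" "$size")
g=$(next_offset "$f" "$size")
echo "size=$size e=$e f=$f g=$g"
```

For nodes 2 and 3, pass the swap offset and size from their label (20974496 and 16556400) to `next_offset` instead.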

so I suppose I will address the character device from /etc/iscsi/targets.

cp -pi /etc/iscsi/auths /etc/iscsi/auths.dist
cp -pi /etc/iscsi/targets /etc/iscsi/targets.dist
vi /etc/iscsi/targets

# extent        file or device          start   length
extent0         /dev/rwd0f              0       4GB
extent1         /dev/rwd0g              0       4GB

# target        flags   storage         netmask
target1.0       rw      extent0         192.168.0.0/24
target1.1       rw      extent1         192.168.0.0/24
#target2.0      rw      extent0         192.168.0.0/24
#target2.1      rw      extent1         192.168.0.0/24
#target3.0      rw      extent0         192.168.0.0/24
#target3.1      rw      extent1         192.168.0.0/24
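Since the targets file only differs by the node number, it could be generated instead of edited by hand on each node. A minimal sketch, assuming the same extents and netmask as above; `gen_targets` is a hypothetical helper, and the tab-separated layout is my choice, not something the notes prescribe.

```shell
#!/bin/sh
# gen_targets NODE [NETMASK]: print /etc/iscsi/targets content for one node.
# Hypothetical helper; extents and netmask are the ones used in these notes.
gen_targets() {
    node=$1
    netmask=${2:-192.168.0.0/24}
    printf '# extent\tfile or device\tstart\tlength\n'
    printf 'extent0\t/dev/rwd0f\t0\t4GB\n'
    printf 'extent1\t/dev/rwd0g\t0\t4GB\n'
    printf '# target\tflags\tstorage\tnetmask\n'
    printf 'target%s.0\trw\textent0\t%s\n' "$node" "$netmask"
    printf 'target%s.1\trw\textent1\t%s\n' "$node" "$netmask"
}

# Print the file for node2; review it before redirecting to /etc/iscsi/targets.
gen_targets 2
```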

TODO LUN MASKING INSTEAD OF PLAYING WITH THE INITIATORS

for the extent size syntax, see NetBSD 7.1 targets(5). the default block size is 512 bytes.

cp -pi /etc/rc.conf /etc/rc.conf.dist
vi /etc/rc.conf

iscsi_target=yes
iscsi_target_flags=""

/etc/rc.d/iscsi_target start

cp -pi /etc/hosts /etc/hosts.dist
vi /etc/hosts

192.168.0.84 node1
192.168.0.82 node2
192.168.0.83 node3

iscsid
#iscsictl add_send_target -a node1
iscsictl add_send_target -a node2
iscsictl add_send_target -a node3
iscsictl refresh_targets
iscsictl list_targets
iscsictl login -P 2 # target2.0
iscsictl login -P 6 # target3.0
#iscsictl login -P 2 # target1.0
#iscsictl login -P 8 # target3.1
#iscsictl login -P 4 # target1.1
#iscsictl login -P 8 # target2.1
iscsictl list_sessions
dmesg | tail

for disk in rsd0d rsd1d; do
	dd if=/dev/zero of=/dev/$disk bs=1024k count=1
done; unset disk

disklabel -r -e -I sd0

label: target2.0
#label: target1.0
#label: target1.1
 a: 8388608 0 RAID

disklabel -r -e -I sd1

label: target3.0
#label: target3.1
#label: target2.1
 a: 8388608 0 RAID

iscsictl list_sessions | grep :target

disklabel sd0 | grep ^label

disklabel sd1 | grep ^label

Now that the three partitions (including the local wd0e) are ready, set up your RAID-5 array, e.g. with one row, three columns (three disks) and no spare disk,

vi /var/tmp/iscsiraid.conf

START array
1 3 0

START disks
/dev/wd0e
/dev/sd0a
/dev/sd1a

START layout
128 1 1 5

START queue
fifo 100

TODO check block size
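As a starting point for the block size TODO, the geometry implied by the layout line can be worked out with a few lines of shell. This is only a sketch: the variable names are mine, 512-byte sectors are assumed, and the capacity is an upper bound, since RAIDframe keeps a component label on each disk.

```shell
#!/bin/sh
# Geometry implied by "START layout 128 1 1 5" with three 4 GiB components.
sect_per_su=128            # first field of the layout line
columns=3                  # from "START array 1 3 0"
component_sectors=8388608  # size of wd0e, sd0a and sd1a
sector_bytes=512           # assumed sector size

su_kib=$(( sect_per_su * sector_bytes / 1024 ))
data_columns=$(( columns - 1 ))          # RAID-5: one column's worth of parity
stripe_kib=$(( su_kib * data_columns ))  # data carried by one full stripe
max_mib=$(( data_columns * component_sectors * sector_bytes / 1024 / 1024 ))

echo "stripe unit: ${su_kib} KiB"
echo "full data stripe: ${stripe_kib} KiB"
echo "capacity (upper bound): ${max_mib} MiB"
```

With these values the stripe unit comes out at 64 KiB, which is the same figure as the commented-out `newfs -b 64k` variant further down.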

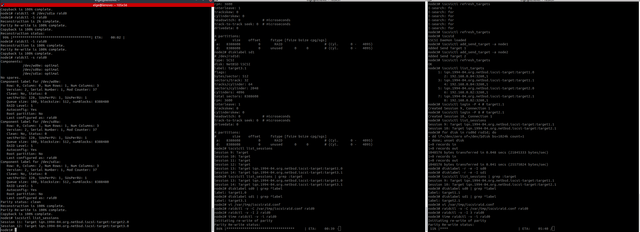

raidctl -v -C /var/tmp/iscsiraid.conf raid0
raidctl -v -I 1 raid0
#raidctl -v -I 2 raid0
#raidctl -v -I 3 raid0
time raidctl -v -i raid0
#raidctl -S raid0
raidctl -A no raid0
raidctl -s raid0

dd if=/dev/zero of=/dev/rraid0d bs=1024k count=1
disklabel raid0
newfs /dev/rraid0a
#newfs -O 2 -b 64k /dev/rraid0a
cat >> /etc/fstab <<-EOF
#/dev/raid0a /raid0 ffs rw 1 2
EOF
mkdir /raid0
touch /raid0/NOT_MOUNTED
mount /dev/raid0a /raid0
echo ok > /raid0/OK-NODE1
#echo ok > /raid0/OK-NODE2
#echo ok > /raid0/OK-NODE3

top-down,

raidctl -s raid0
raidctl -S raid0
raidctl -p raid0

check what disks you are seeing,

disklabel -r -F sd0 | grep ^label
disklabel -r -F sd1 | grep ^label

check that you are really seeing them,

hexdump -C -n 16 /dev/rwd0e
hexdump -C -n 16 /dev/rsd0a
hexdump -C -n 16 /dev/rsd1a

check the initiator sessions with,

iscsictl list_sessions -c

When a remote disk is not accessible anymore (input/output errors), you need to get the very same device name back, e.g. sd0. Sometimes an iscsictl logout -I SESSIONID is enough to free the slot; sometimes it is not, and pkill iscsid helps. However, this kills both of your sessions, which is far from ideal for a RAID-5 array.

tail -F /var/log/messages &
raidctl -v -c /var/tmp/iscsiraid.conf raid0
#raidctl -v -C /var/tmp/iscsiraid.conf raid0

Make sure the component is available (see above DISK IS GONE) then,

raidctl -v -R /dev/sd0a raid0

raidctl -v -p raid0
#raidctl -v -P raid0

Avoid rebooting the nodes: a reboot shuts that node's targets down, which makes the arrays on the other nodes go wrong. If you do reboot, make sure you have set up the shutdown scripts as described. To cope with reboots, you also need some kind of job scheduling, namely no automatic startup of the initiator sessions. When the machine boots up, only the targets are started, and possibly the iSCSI initiator daemon, but there is no login to the LUNs.

vi /root/bin/start-initiators

#!/bin/ksh
print starting iscsid: \\c #self verbose
/sbin/iscsid
print pinging node1... \\c
ping -c1 node1 >/dev/null && print done || exit 1
print pinging node2... \\c
ping -c1 node2 >/dev/null && print done || exit 1
print pinging node3... \\c
ping -c1 node3 >/dev/null && print done || exit 1
#/sbin/iscsictl add_send_target -a node1
/sbin/iscsictl add_send_target -a node2
/sbin/iscsictl add_send_target -a node3
/sbin/iscsictl refresh_targets
/sbin/iscsictl list_targets
/sbin/iscsictl login -P 2 # target2.0
/sbin/iscsictl login -P 6 # target3.0
#/sbin/iscsictl login -P 2 # target1.0
#/sbin/iscsictl login -P 8 # target3.1
#/sbin/iscsictl login -P 4 # target1.1
#/sbin/iscsictl login -P 8 # target2.1
/sbin/iscsictl list_sessions
disklabel -r -F sd0 | grep ^label
disklabel -r -F sd1 | grep ^label
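The three ping checks in start-initiators could be folded into one loop. A rough sketch in plain sh, not the script actually used here: `check_nodes` and the `PROBE` variable are names invented for the example, and `PROBE` is set to `true` at the end so the block can be dry-run on a machine that cannot reach the nodes.

```shell
#!/bin/sh
# PROBE is the command used to test one host (hypothetical knob for dry runs).
: "${PROBE:=ping -c1}"

# check_nodes HOST...: stop at the first host that does not answer
check_nodes() {
    for node in "$@"; do
        printf 'pinging %s... ' "$node"
        if $PROBE "$node" >/dev/null 2>&1; then
            echo done
        else
            echo FAILED
            return 1
        fi
    done
}

# Dry run with a probe that always succeeds:
PROBE=true
check_nodes node1 node2 node3
```

In the real script one would leave `PROBE` at its default and write `check_nodes node1 node2 node3 || exit 1` before the iscsictl calls.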

vi /root/bin/mount-raid

#!/bin/ksh
raidctl -v -c /var/tmp/iscsiraid.conf raid0 && \
fsck_ffs /dev/rraid0a && \
mount_ffs /dev/raid0a /raid0

cp -pi /etc/rc.local /etc/rc.local.dist
vi /etc/rc.local

/sbin/iscsid && echo -n ' iscsid'

#(new file)

vi /etc/rc.shutdown.local

echo -n unmounting /raid0/...
umount /raid0 && print done
echo -n unconfiguring raid0...
raidctl -v -u raid0 && print done
sessions=`/sbin/iscsictl list_sessions | awk '{print $2}' | cut -f1 -d:`
for session in $sessions; do
	echo -n logging out from session $session:
	#self
	/sbin/iscsictl logout -I $session
done; unset session
echo -n killing iscsid...
pkill iscsid && echo done

ln -s ../../etc/rc.shutdown.local /root/bin/rc.shutdown.local
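The session ID extraction used in rc.shutdown.local can be tried on canned input before trusting it at shutdown time. In this sketch the sample lines are an assumption about what `iscsictl list_sessions` prints (inferred from the awk/cut pipeline, not copied from a real run), and `session_ids` is just a name for the example.

```shell
#!/bin/sh
# session_ids: read "iscsictl list_sessions"-style lines on stdin and
# print the session IDs, one per line (same pipeline as rc.shutdown.local).
session_ids() {
    awk '{print $2}' | cut -f1 -d:
}

# Canned sample; the exact line format is an assumption.
sample='Session 2: Target iqn.1994-04.org.netbsd.iscsi-target:target2.0
Session 4: Target iqn.1994-04.org.netbsd.iscsi-target:target3.0'

printf '%s\n' "$sample" | session_ids
```

If the real output has a different shape, only the awk field number should need adjusting.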

dsh -e /sbin/raidctl -S raid0

dsh -e ls -alhF /raid0/

vi /root/bin/check-health

#!/bin/ksh
PATH=/sbin:/bin:/usr/sbin:/usr/bin
hexdump -C -n 16 /dev/rwd0e >/dev/null || print FAILED: cannot access wd0
hexdump -C -n 16 /dev/rsd0a >/dev/null || print FAILED: cannot access sd0
hexdump -C -n 16 /dev/rsd1a >/dev/null || print FAILED: cannot access sd1
optimal=`raidctl -s raid0 | grep optimal$ | wc -l`
parity=`raidctl -p raid0 | awk '{print $NF}'`
(( $optimal == 3 )) || print array raid0 not optimal
[[ $parity = clean ]] || print parity is not clean

dsh -e -s /root/bin/check-health
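The parsing done by check-health can be exercised without a live array by feeding it canned text. A sketch in plain sh: the two samples are assumptions about the shape of `raidctl -s` and `raidctl -p` output (with one component deliberately marked failed), and the function names are invented for the example.

```shell
#!/bin/sh
# count_optimal: count component lines ending in "optimal" on stdin
count_optimal() {
    grep -c 'optimal$'
}

# parity_state: last word of the parity status line on stdin
parity_state() {
    awk '{print $NF}'
}

# Canned samples; the exact output format is an assumption.
status_sample='Components:
           /dev/wd0e: optimal
           /dev/sd0a: failed
           /dev/sd1a: optimal
No spares.'
parity_sample='/dev/rraid0d: Parity status: clean'

optimal=$(printf '%s\n' "$status_sample" | count_optimal)
parity=$(printf '%s\n' "$parity_sample" | parity_state)

[ "$optimal" -eq 3 ] || echo "array raid0 not optimal ($optimal of 3 components)"
[ "$parity" = clean ] || echo "parity is not clean"
```

Reporting how many components are optimal, rather than only that the array is not, makes the dsh output a little easier to read across three nodes.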

Check that the bits and pieces are in place,

dsh -e grep iscsi /etc/rc.conf
dsh -e ls -l /etc/rc.local
dsh -e grep ksh /etc/rc.d/local
dsh -e ls -l /root/bin/start-initiators
dsh -e ls -l /root/bin/mount-raid
dsh -e ls -l /etc/rc.shutdown.local